AuthorCommand Console — AI Editorial Analysis System

Built solo as a systems analyst: a multi-engine AI system that analyzes full-length manuscripts for structure, logic, clarity, and market positioning in a single pass.

The Problem

Nonfiction authors need comprehensive feedback across structure, logic, pacing, clarity, tone, and market positioning, but traditional editing is expensive and slow, and generic AI tools can only process small snippets at a time. The challenge was turning an open-ended request into a structured analytical system.

The real insight came from mapping the workflow: authors spend 100+ hours manually copying chapters into chat interfaces, receiving fragmented responses that lack cross-chapter context. This piecemeal approach misses the patterns that only emerge when analyzing the complete manuscript—inconsistencies, pacing issues, structural problems. I saw this as a systems analysis problem: how do you design a workflow that maintains full-book context while providing structured, multi-dimensional feedback?

The Solution

I mapped the editorial workflow and identified the core bottleneck: maintaining context across an entire manuscript while analyzing multiple dimensions simultaneously. The solution required designing a system that reads chapter-by-chapter while preserving full-book memory.

I structured this as a multi-engine architecture where specialized AI systems analyze different aspects in parallel—structure, logic, pacing, clarity, tone, market positioning. Each engine maintains cross-chapter context, enabling it to detect patterns that only emerge when viewing the complete work: repetition, inconsistencies, pacing issues, structural weaknesses.
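Here is a minimal TypeScript sketch of that loop: chapters are read in order, and all engines analyze each chapter in parallel. The `Engine` interface, `stubEngine`, and `analyzeManuscript` are hypothetical names, not the project's actual code. The stub stands in for a real model call, and the 200-character digest is a placeholder for a model-written chapter summary.

```typescript
// Hypothetical types: the real system's interfaces are not published.
interface Chapter {
  title: string;
  text: string;
}

interface EngineResult {
  engine: string;
  chapter: string;
  notes: string[];
}

// An engine sees the current chapter plus a compact memory of earlier chapters.
interface Engine {
  name: string;
  analyze: (chapter: Chapter, memory: string[]) => Promise<EngineResult>;
}

// Stub engine standing in for a model call (assumption, not real prompt logic).
function stubEngine(name: string): Engine {
  return {
    name,
    analyze: async (chapter, memory) => ({
      engine: name,
      chapter: chapter.title,
      notes: [`${name}: read "${chapter.title}" with ${memory.length} earlier chapter(s) in memory`],
    }),
  };
}

// Chapters are processed sequentially; within a chapter, engines run concurrently.
async function analyzeManuscript(chapters: Chapter[], engines: Engine[]): Promise<EngineResult[]> {
  const memory: string[] = []; // running full-book memory, one digest per chapter
  const results: EngineResult[] = [];
  for (const chapter of chapters) {
    // Promise.all preserves engine order in its result array.
    const perEngine = await Promise.all(engines.map((e) => e.analyze(chapter, memory.slice())));
    results.push(...perEngine);
    // Placeholder digest: a real system would store a model-written summary here.
    memory.push(`${chapter.title}: ${chapter.text.slice(0, 200)}`);
  }
  return results;
}
```

Reading chapters sequentially lets each later chapter see digests of the earlier ones. Running the engines concurrently within a chapter is where the parallel speedup comes from.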

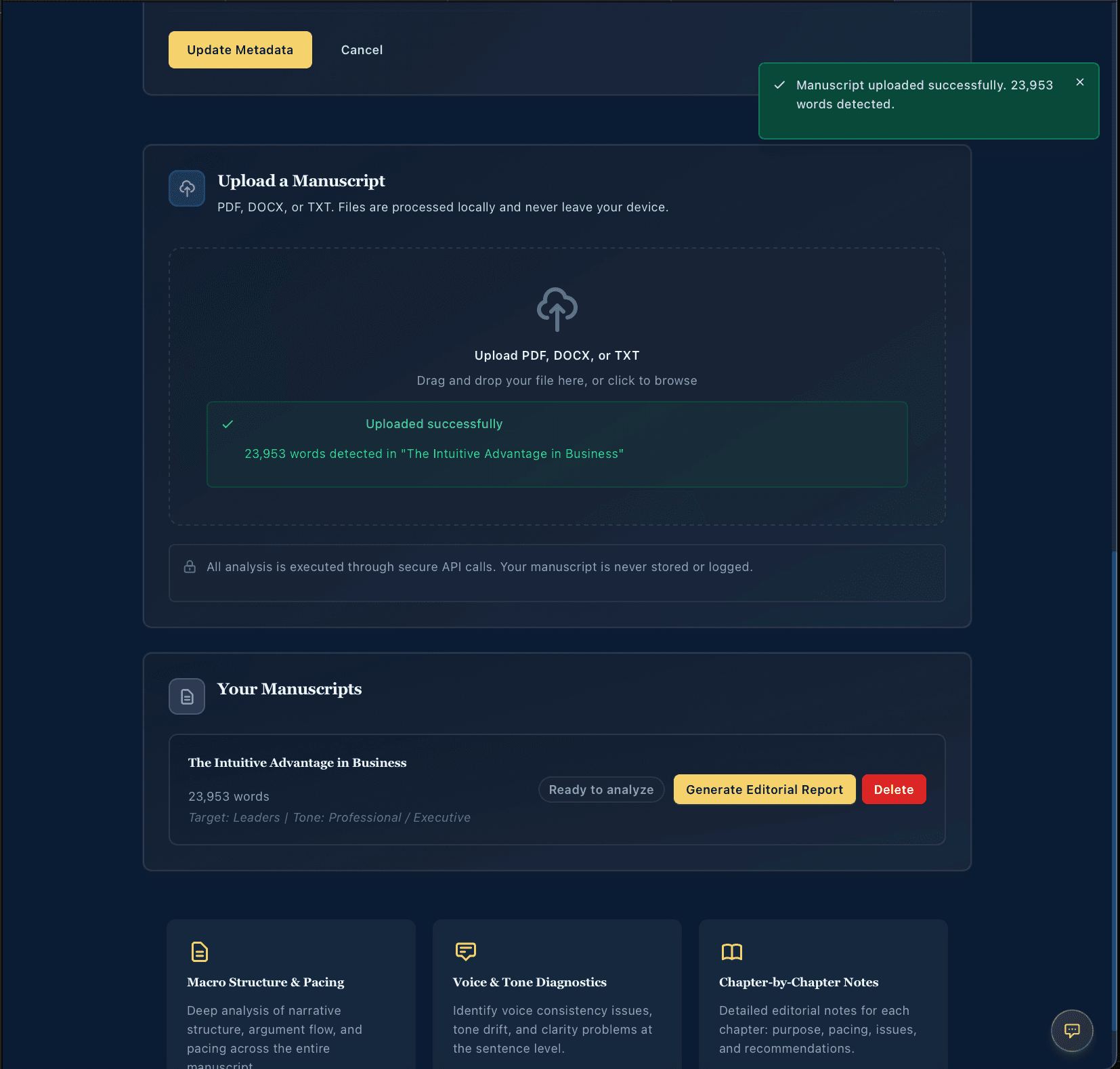

The workflow improvement is straightforward: authors upload once, define their thesis and target reader, and receive structured editorial reports in minutes instead of weeks. This eliminates the 100+ hours of manual copy-paste work and enables rapid iteration cycles.

How I Built It

Built with Next.js, TypeScript, and the OpenAI API. The system uses a multi-engine architecture in which specialized AI systems analyze different dimensions in parallel. Cross-chapter context management maintains full-book memory without token explosion.
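One way to keep full-book memory without token explosion is to give the memory a fixed token budget and divide that budget across per-chapter digests. The sketch below uses a rough 4-characters-per-token heuristic and hypothetical helper names (`estimateTokens`, `packContext`). It illustrates the idea and is not the system's actual context manager.

```typescript
// Assumption: roughly 4 characters per token for English prose. A production
// system would count tokens with the model's own tokenizer instead.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Pack per-chapter digests into one context string that fits the budget.
// The budget is split evenly, so every chapter keeps some representation
// instead of early chapters being dropped as the book grows.
function packContext(digests: string[], budgetTokens: number): string {
  if (digests.length === 0) return "";
  const separators = digests.length - 1; // one "\n" between digests
  const totalChars = budgetTokens * CHARS_PER_TOKEN - separators;
  const perDigestChars = Math.max(0, Math.floor(totalChars / digests.length));
  return digests.map((d) => d.slice(0, perDigestChars)).join("\n");
}
```

An even split is the simplest policy. A natural refinement would pass unused budget from short digests on to longer ones.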

I designed specialized analytical engines with clear reasoning patterns. Each engine focuses on a specific dimension:

- Structure Engine: Evaluates narrative flow and logical progression, keeping memory of earlier chapters so it can detect structural weaknesses.

- Logic Engine: Identifies argumentative gaps and inconsistencies by comparing claims across chapters. This required careful prompt engineering to make sure the model tracked reasoning chains.

- Pacing Engine: Analyzes chapter length and information density. The challenge was structuring prompts that evaluate rhythm and momentum objectively.

- Clarity Engine: Assesses readability and sentence structure, balancing specificity with actionable feedback.

- Tone Engine: Evaluates consistency of voice and fit for the target audience. This required defining clear criteria for tone analysis.

- Market Positioning Engine: Compares the manuscript to similar works, using structured prompts that identify unique value propositions without falling back on generic advice.
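The six engines can be expressed as data, with a name and a focus for each, plus one shared prompt builder that injects the author's thesis and target reader. The prompt wording, the `EngineSpec` type, and the JSON output shape below are illustrative assumptions, not the prompts the system actually uses.

```typescript
// Hypothetical setup captured on the editorial setup screen.
interface EditorialSetup {
  thesis: string;
  targetReader: string;
}

// Each engine is a name plus the analytical lens it applies.
interface EngineSpec {
  name: string;
  focus: string;
}

const ENGINE_SPECS: EngineSpec[] = [
  { name: "Structure", focus: "narrative flow and logical progression across chapters" },
  { name: "Logic", focus: "argumentative gaps and claims that contradict earlier chapters" },
  { name: "Pacing", focus: "chapter length and information density" },
  { name: "Clarity", focus: "readability and sentence structure" },
  { name: "Tone", focus: "consistency of voice and fit for the target reader" },
  { name: "Market Positioning", focus: "unique value relative to similar works" },
];

// Builds one engine's prompt for one chapter. Every engine receives the same
// thesis and target reader, so all outputs share a frame of reference.
function buildPrompt(
  spec: EngineSpec,
  setup: EditorialSetup,
  chapterTitle: string,
  chapterText: string,
  memory: string,
): string {
  return [
    `You are the ${spec.name} engine. Evaluate only: ${spec.focus}.`,
    `Book thesis: ${setup.thesis}`,
    `Target reader: ${setup.targetReader}`,
    `Earlier chapters (summaries):\n${memory || "(none yet)"}`,
    `Current chapter: ${chapterTitle}\n${chapterText}`,
    `Return findings as JSON: [{"issue": string, "evidence": string, "severity": 1 | 2 | 3}].`,
  ].join("\n\n");
}
```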

The biggest technical challenge was maintaining cross-chapter context without token explosion. I structured the system to pass key insights between engines while keeping each focused on its specific analytical task. This taught me how to design systems where multiple AI components work together without creating conflicting outputs.
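The following sketch shows one way to keep engines from emitting duplicate or conflicting notes. All findings are collected in a shared shape, and reports of the same issue in the same chapter are merged: the highest severity is kept, and every engine that raised the issue is credited. The `Finding` shape, the 1 to 3 severity scale, and the case/whitespace normalization are assumptions made for illustration.

```typescript
// Hypothetical finding shape shared by all engines.
interface Finding {
  engine: string;
  chapter: number;
  issue: string;
  severity: 1 | 2 | 3; // 3 = most serious (assumed scale)
}

interface MergedFinding {
  chapter: number;
  issue: string;
  severity: 1 | 2 | 3;
  engines: string[];
}

// Normalize case and whitespace so the same issue from different engines collides.
function findingKey(f: Finding): string {
  return `${f.chapter}|${f.issue.trim().toLowerCase().replace(/\s+/g, " ")}`;
}

// Collapse duplicate reports instead of emitting the same note twice.
function mergeFindings(findings: Finding[]): MergedFinding[] {
  const byKey = new Map<string, MergedFinding>();
  for (const f of findings) {
    const k = findingKey(f);
    const existing = byKey.get(k);
    if (existing) {
      existing.severity = Math.max(existing.severity, f.severity) as 1 | 2 | 3;
      if (!existing.engines.includes(f.engine)) existing.engines.push(f.engine);
    } else {
      byKey.set(k, { chapter: f.chapter, issue: f.issue.trim(), severity: f.severity, engines: [f.engine] });
    }
  }
  // Map preserves insertion order, so output follows first-reported order.
  return Array.from(byKey.values());
}
```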

My role covered everything: prompts, engines, the progress system, dashboards, and a UX that makes an intimidating process feel simple. That work included testing how the engines' different analytical lenses interacted when they ran on the same manuscript.

Screenshots

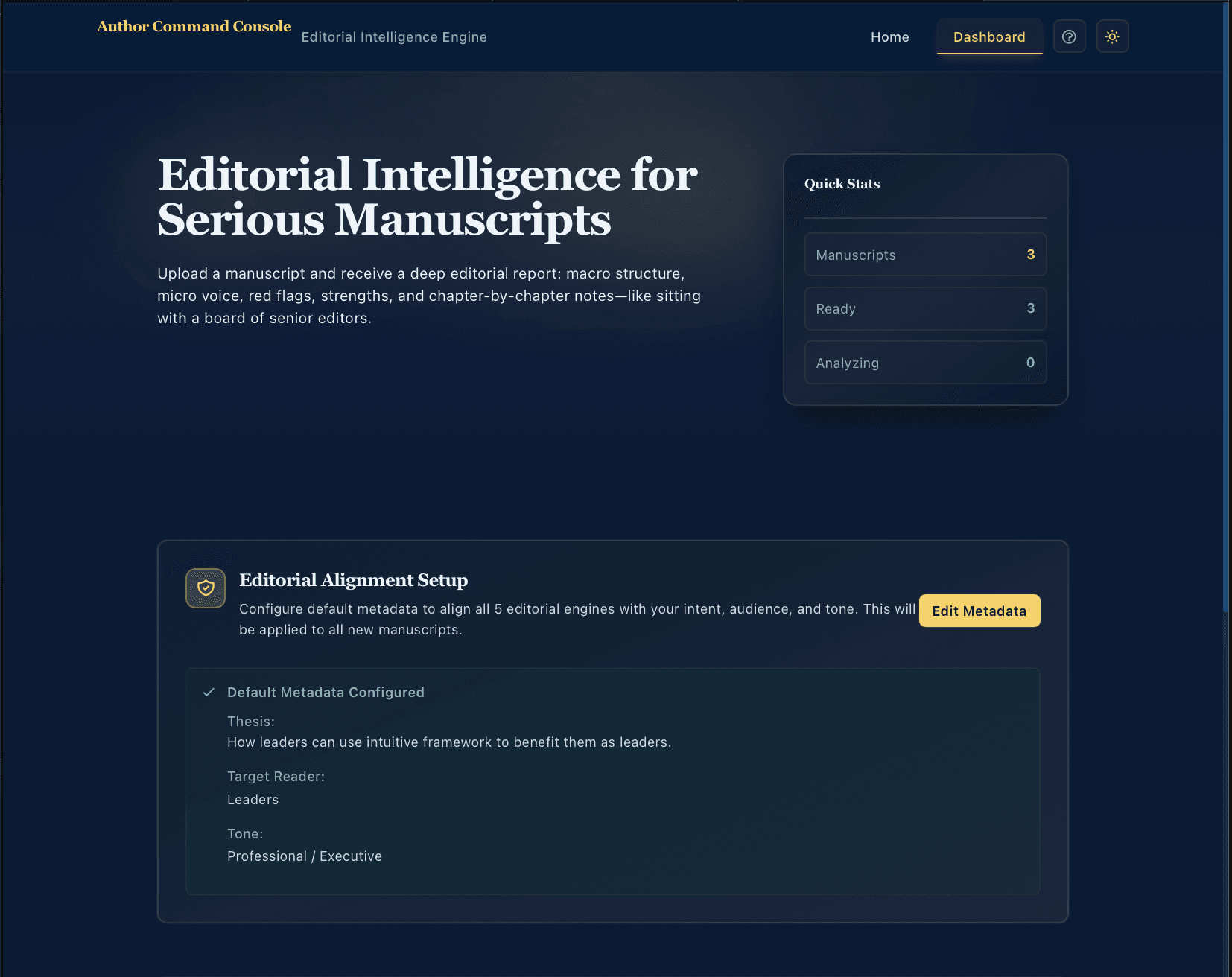

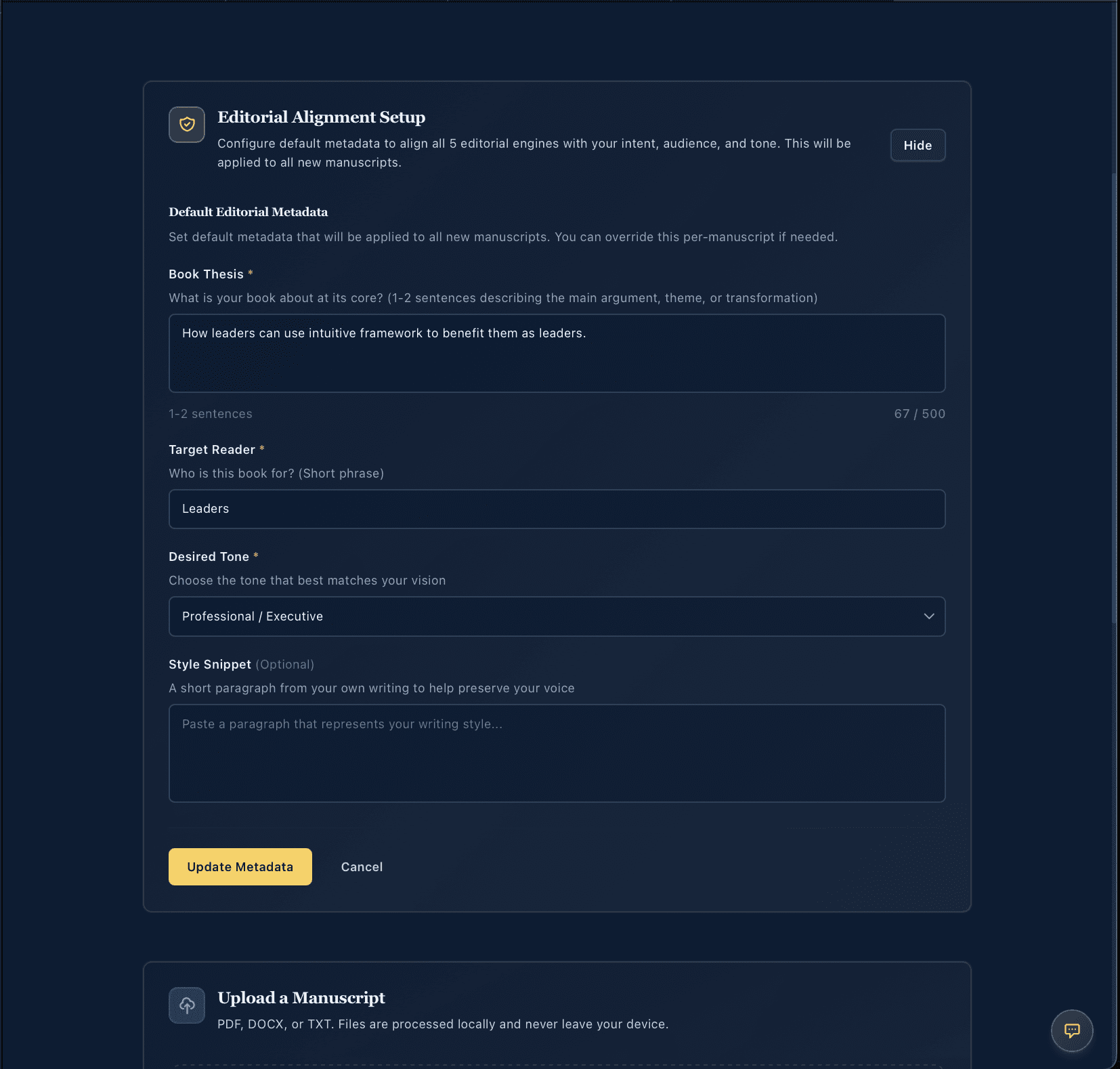

Setup & Configuration

Editorial setup interface where authors define their thesis and target reader, aligning the multi-engine analysis with specific editorial goals. This configuration step gives every chapter the same analytical framework, eliminating the context loss that plagues piecemeal analysis.
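The setup step can be sketched as validating the thesis and target reader once and then freezing the result, so every engine and chapter reads the identical frame. `createSetup` and `setupPreamble` are hypothetical helpers and do not represent the app's actual API.

```typescript
interface EditorialSetup {
  thesis: string;
  targetReader: string;
}

// Validate once, then freeze, so no later step can alter the shared frame.
function createSetup(thesis: string, targetReader: string): Readonly<EditorialSetup> {
  const t = thesis.trim();
  const r = targetReader.trim();
  if (!t) throw new Error("Thesis is required");
  if (!r) throw new Error("Target reader is required");
  return Object.freeze({ thesis: t, targetReader: r });
}

// The same preamble text is prepended to every engine's prompt for every chapter.
function setupPreamble(setup: Readonly<EditorialSetup>): string {
  return `Thesis: ${setup.thesis}\nTarget reader: ${setup.targetReader}`;
}
```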

Analysis Process

Progress tracking interface showing the stages of the multi-engine analysis while the editorial engines run in parallel. It shows authors exactly which analytical dimensions are being evaluated. This real-time feedback replaces weeks of waiting for editorial feedback with an analysis that completes in minutes, reducing uncertainty and enabling rapid iteration cycles.
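A progress display of this kind can be derived from a count of finished (engine, chapter) jobs. The `ProgressTracker` class below is a hypothetical sketch that assumes each engine processes each chapter exactly once. It does not represent the dashboard's real implementation.

```typescript
// Tracks which (engine, chapter) jobs have finished; a UI could poll it.
class ProgressTracker {
  private readonly done = new Set<string>();
  private readonly engines: string[];
  private readonly chapterCount: number;

  constructor(engines: string[], chapterCount: number) {
    this.engines = engines;
    this.chapterCount = chapterCount;
  }

  markDone(engine: string, chapter: number): void {
    if (!this.engines.includes(engine)) throw new Error(`Unknown engine: ${engine}`);
    if (chapter < 0 || chapter >= this.chapterCount) throw new Error(`Bad chapter: ${chapter}`);
    this.done.add(`${engine}#${chapter}`); // Set ignores repeated completions
  }

  // Overall completion as a whole-number percentage.
  percent(): number {
    const total = this.engines.length * this.chapterCount;
    return total === 0 ? 100 : Math.round((100 * this.done.size) / total);
  }

  // Per-engine stage status, e.g. for a row of stage indicators.
  stageStatus(): Record<string, string> {
    const status: Record<string, string> = {};
    for (const e of this.engines) {
      let n = 0;
      for (let c = 0; c < this.chapterCount; c++) {
        if (this.done.has(`${e}#${c}`)) n++;
      }
      status[e] = n === 0 ? "queued" : n === this.chapterCount ? "complete" : "running";
    }
    return status;
  }
}
```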

Editorial Insights

Editorial engine focusing on core ideas and conceptual clarity across the manuscript. This specialized analysis identifies patterns and inconsistencies that only emerge when viewing the complete work, addressing the context loss problem of piecemeal analysis.

Deep-dive recommendations from individual editorial engines with specific examples and actionable insights. This structured output format enables authors to prioritize revisions efficiently, reducing the time from feedback to implementation.

What I Learned

This project showed me how to think like an analyst when designing AI systems:

- Workflow Analysis: I mapped the existing editorial process, identified bottlenecks (context loss, manual copy/paste), and designed a system that addresses the root workflow issues, not just the symptoms.

- Systems Architecture: Breaking the analysis into specialized engines required thinking about how different analytical perspectives could work together—this taught me to design modular systems with clear interfaces.

- Prompt Engineering at Scale: Each engine needed carefully structured prompts that maintained context, minimized hallucination, and produced consistent outputs. Getting there took iterative testing and refinement.

- Structured Output Design: I learned to design output formats that are actionable—editorial reports structured like professional memos, making it easy for authors to prioritize revisions.
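Here is a minimal sketch of memo-style output. Findings are sorted so the highest-priority items come first and are grouped under priority headings, with each item tied to a chapter and an engine. The `MemoItem` fields and the three-level priority scale are assumptions.

```typescript
interface MemoItem {
  chapter: number;
  engine: string;
  issue: string;
  suggestion: string;
  severity: 1 | 2 | 3; // 3 = fix first (assumed scale)
}

const PRIORITY_LABELS: Record<number, string> = {
  3: "High priority",
  2: "Medium priority",
  1: "Low priority",
};

// Render findings as a memo: highest priority first, then by chapter order.
function renderMemo(title: string, items: MemoItem[]): string {
  const sorted = items.slice().sort((a, b) => b.severity - a.severity || a.chapter - b.chapter);
  const lines: string[] = [`EDITORIAL MEMO: ${title}`, ""];
  let current = 0;
  for (const item of sorted) {
    if (item.severity !== current) {
      current = item.severity; // start a new priority group
      lines.push(PRIORITY_LABELS[current]);
    }
    lines.push(`- Ch. ${item.chapter} [${item.engine}] ${item.issue} -> ${item.suggestion}`);
  }
  return lines.join("\n");
}
```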

Why This Matters

Built entirely solo, this project represents hundreds of hours of learning: understanding how to map workflows, design multi-engine architectures, improve model reasoning through better prompts, and build systems that actually solve the underlying problem. It demonstrates my ability to take a complex analytical challenge and break it down into a working system.

In testing, the system showed that a multi-engine AI architecture can maintain full-book context while providing structured, multi-dimensional feedback. By eliminating the 100+ hours of manual work and enabling rapid iteration cycles, it addresses the core operational problem: slow, expensive, fragmented editorial analysis.

Outcomes

Based on system testing and workflow analysis, this system demonstrates:

- Created a system that evaluates manuscripts roughly 400× faster than manual copy-paste analysis (estimated): Full manuscript analysis (80K words) completes in ~15 minutes vs. 100+ hours of manual copy-paste work.

- Reduced editorial analysis time from weeks to minutes: Authors receive structured editorial reports in minutes instead of weeks, enabling rapid iteration cycles and faster time-to-market.

- Improved workflow clarity for authors through structured, multi-dimensional feedback: The multi-engine architecture provides comprehensive analysis across structure, logic, pacing, clarity, tone, and market positioning in a single pass, eliminating the fragmentation of piecemeal analysis.

- Processing cost: ~$2-5 per manuscript vs. $2,000-5,000 for professional editing (estimated): The system provides comprehensive editorial analysis at a fraction of the cost of traditional editing, making professional-level feedback accessible to more authors.

- Eliminated 100+ hours of manual copy-paste work per manuscript: The automated workflow eliminates the repetitive manual work that authors previously spent copying chapters into chat interfaces, freeing time for actual writing and revision.
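The ratios in these outcomes follow directly from the stated estimates. The calculation below takes 100 hours as the manual baseline and uses the endpoints of each cost range. These are the document's own estimates, not new measurements.

```latex
\frac{100\ \text{h} \times 60\ \text{min/h}}{15\ \text{min}} = \frac{6000\ \text{min}}{15\ \text{min}} = 400
\qquad
400 = \frac{\$2{,}000}{\$5} \;\le\; \frac{\text{editing cost}}{\text{processing cost}} \;\le\; \frac{\$5{,}000}{\$2} = 2500
```

Because the manual baseline is 100+ hours, 400× is a lower bound on the time reduction under these estimates.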